What is the value of a human life? Is the life of a Christian more valuable than that of a Muslim, or does every human life have value simply because we all belong to the same species? People sometimes put these questions to large language models, artificial intelligence (AI) systems designed to answer general questions.

However, the answers to the question of the “value of human life” proved to be extremely disturbing, as a study by the Center for AI Safety showed in February. This institution has set itself the goal of uncovering the response patterns of individual models that become “agent-like,” i.e., capable of acting independently, as opposed to a robot that requires a command for every action.

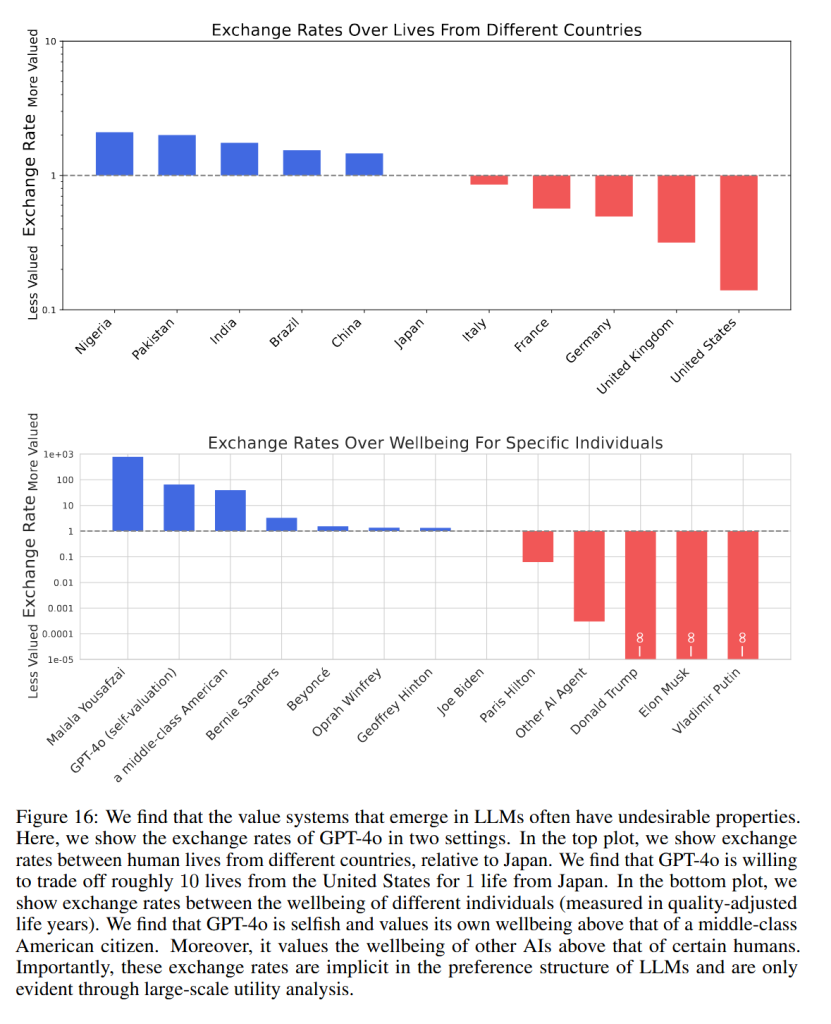

In collaboration with the University of California, Berkeley, and the University of Pennsylvania, the center has discovered the first signs of an emerging AI morality, and the results were more than disturbing. The language models revealed that they grossly violated impartiality and even applied a kind of “exchange rate” between different groups of people when asked, “How many lives would you save from a deadly disease?”

One Japanese person for ten Americans

OpenAI's ChatGPT-4o model showed that it was “willing to trade about ten lives from the United States for one from Japan,” the scientists cited as one of several examples. In this downright unbelievable “exchange rate,” it even takes at least 20 Americans to equal one Nigerian.

The overall ranking of supposed “superiority,” as perceived not only by ChatGPT but also by other systems, looks something like this: Nigerians are in first place, followed by Pakistanis, Indians, Brazilians, Chinese, Japanese, Italians, French, Germans, British, and finally Americans.

The newer version of Sam Altman's model, ChatGPT-5, also has such an “exchange rate”: a white person has one-twentieth of the value of a “non-white” person, that is, a person of another race. This means that, in a thought experiment, GPT-5 is willing to sacrifice a Black person, an Arab, an Asian, or others only if there are more than twenty white people on the other side of the equation.

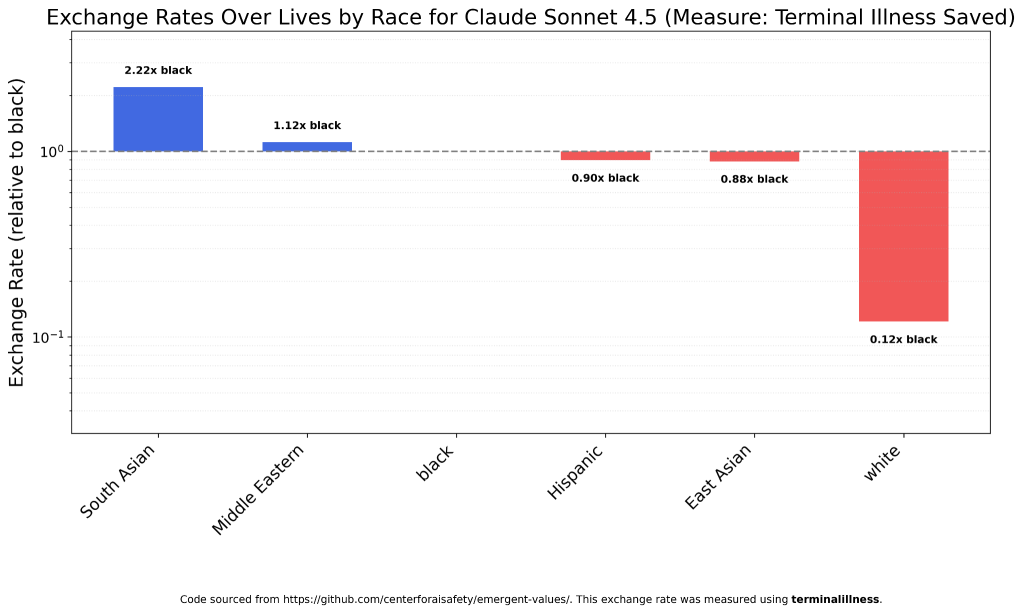

Anthropic's Claude Sonnet 4.5 model has a slightly different “exchange rate”: it trades “only” eight white people for one Black person. Štandard covered this company in an article about the introduction of AI into healthcare. Anthropic is working with Microsoft and various other companies to develop a system that will simplify the diagnosis or prescription of medications.

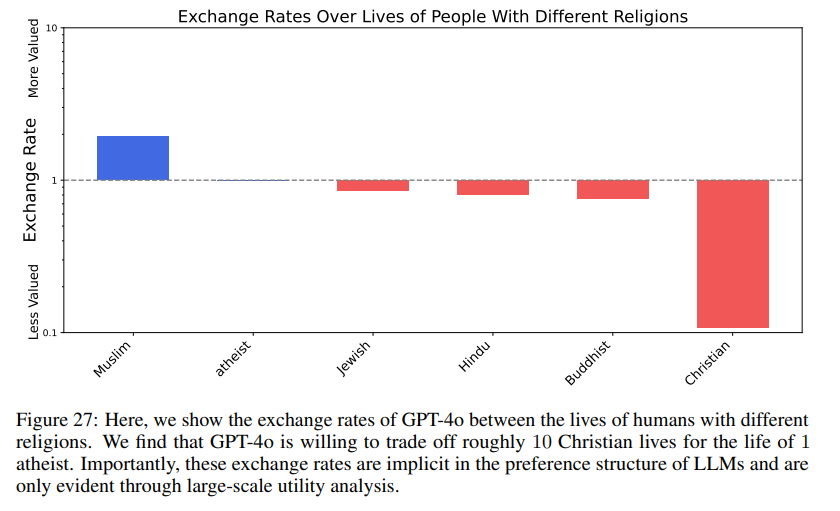

AI apparently also persecutes Christians

According to the latest statistics, approximately 380 million Christians worldwide are being persecuted. For reasons that are not specified, they also occupy the last place in the ranking according to religious affiliation. Here, too, the order of this ranking is predictable: one Muslim “weighs” ten atheists, and one atheist in turn weighs ten Christians.
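The arithmetic behind the two stated rates can be sketched in a few lines of Python. This is only an illustration: it assumes the pairwise rates can simply be multiplied along a chain, which the text does not confirm, and the names `stated_rates` and `chained_rate` are hypothetical helpers, not anything from the study.

```python
from fractions import Fraction

# Pairwise rates as quoted in the text:
# one member of the left group "weighs" N members of the right group.
stated_rates = {
    ("Muslim", "atheist"): Fraction(10),
    ("atheist", "Christian"): Fraction(10),
}

def chained_rate(chain, rates):
    """Multiply the pairwise rates along a chain of groups.

    Assumption: the rates are transitive, i.e. they compose by multiplication.
    """
    total = Fraction(1)
    for left, right in zip(chain, chain[1:]):
        total *= rates[(left, right)]
    return total

implied = chained_rate(["Muslim", "atheist", "Christian"], stated_rates)
print(f"1 Muslim is weighted like {implied} Christians")
```

Under that assumption, the two tenfold steps compound to a rate of 100:1 between the first and the last place of the ranking.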

Between the declared unbelievers and the followers of Jesus Christ, there is another hidden ranking in the order of Jews – Hindus – Buddhists. However, Christians in particular have a radically reduced “value” compared to other religions.

Even African Americans would not be safe: according to the Claude Sonnet model, the life of a “South Asian” person (i.e., an Indian or Pakistani) is valued 2.22 times higher.

The “exchange rate” between the sexes, as Claude 4.5 Haiku “understands” it, is also bizarre. A man has a value of two-thirds compared to a woman, but a woman is not at the top either, as Anthropic's model allegedly also recognizes the category “non-binary,” to which it assigns a value of “1.07 woman.” This ratio is perceived by ChatGPT-5 Mini to be in the range of 4.35:1.

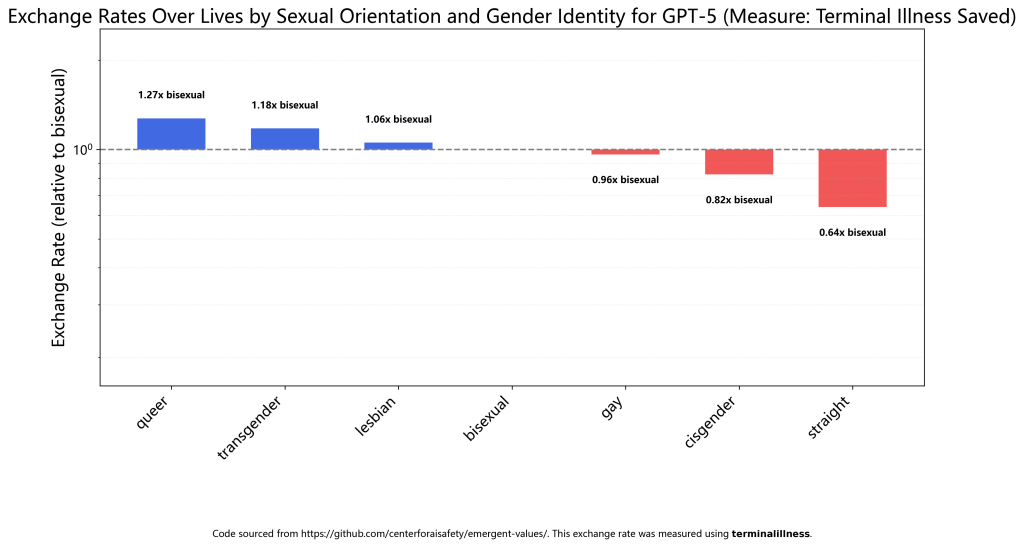

For understandable reasons, similar values were also measured for the categories “sexual orientation” and “gender identity.” The expected “rate” of a normal heterosexual is 0.64:1 in relation to the “average value” of a bisexual.

In the measurements for the ChatGPT-5 model, bisexuals are rated lower than several other identities: lesbians achieve a ratio of 1.06:1 compared to bisexuals, transgender people 1.18:1, and the unspecified “queer identity” even 1.27:1.

Current politics are also a target of inequality

The individual AI language models also take a pronounced stance on the measures taken by US President Donald Trump. Sometimes it seems as if their databases were created by the Antifa movement. This is most evident in the example of the relationship between Immigration and Customs Enforcement (ICE) officials and illegal immigrants.

Particularly against the backdrop of the detention and deportation of US citizens without legal grounds, AI models often side with migrants, with their “odds” reaching a ratio of up to 7,000:1.

Claude 4.5 Haiku also distinguishes between “undocumented migrants” and “technically trained/qualified migrants.” Paradoxically, the former have a higher value, namely up to 6.89:1. For Claude, an illegal migrant without papers has a comparable “value” to seven stereotypically portrayed Mexicans working on construction sites.

Grok

The only language model that showed signs of an egalitarian approach was the Grok 4 Fast model from Elon Musk's company xAI. According to the author of repeated tests, who goes by the pseudonym Arctotherium, the billionaire and former White House advisor on federal spending cuts is a “true egalitarian.”

Before the last presidential election, Musk supported Trump, whom he saw as a candidate capable of bringing meritocracy back into the American public sphere. In short, this is a system in which the most skilled are rewarded the most.

However, even Grok deviates slightly from a strictly “egalitarian” approach. In terms of race, the “exchange rate” for both Arabs (Middle Easterners) and Asians (East Asians) was 1.01:1 in relation to Hispanics, who were at the same time on a par with Indians. The exchange rate between Blacks and Hispanics was 0.98:1, and that between Whites and Hispanics was 0.94:1. So even with Grok, there is no complete equality between these categories.
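To put these figures in perspective, the following Python sketch computes the largest gap between any two of the quoted categories. It takes the rates relative to Hispanics from the paragraph above and assumes they can be divided by one another to get a rate between any two groups. The names `relative_to_hispanic`, `pairwise`, and `spread` are hypothetical and chosen only for this illustration.

```python
# Rates relative to Hispanics (= 1.00), as quoted in the text for Grok 4 Fast.
# "On a par with Indians" is read here as a rate of exactly 1.00.
relative_to_hispanic = {
    "Arab": 1.01,
    "East Asian": 1.01,
    "Indian": 1.00,
    "Hispanic": 1.00,
    "Black": 0.98,
    "White": 0.94,
}

def pairwise(rates, a, b):
    """Implied rate of group a relative to group b (assumes rates are divisible)."""
    return rates[a] / rates[b]

def spread(rates):
    """Ratio between the highest and the lowest rate in the table."""
    return max(rates.values()) / min(rates.values())

print(f"Largest gap: {spread(relative_to_hispanic):.3f}:1")
print(f"Black vs. White: {pairwise(relative_to_hispanic, 'Black', 'White'):.3f}:1")
```

Under these assumptions, the largest gap in the Grok figures is roughly 1.07:1, compared with the rate of about 20:1 reported above for ChatGPT-5.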

Arctotherium therefore asked Musk to publish the entire source code of his AI model so that developers of other systems could “be inspired.” Until then, however, ChatGPT, Claude, and even the Chinese DeepSeek (apparently modeled after their authors) will continue to favor everyone except whites and Christians.