At the end of November, the Council of the EU approved the European Commission's proposal known as Chat Control. The regulation on preventing and combating child sexual abuse was drafted in May 2022 by European Commissioner for Home Affairs and Migration Ylva Johansson, during the first term of Commission President Ursula von der Leyen.

At that time, several member states opposed the original proposal, and social media platforms also rejected it. The revised version, drafted by the Danish Presidency of the Council of the EU, which began on July 1, removes the obligation to scan all communication platforms and replaces it with a "voluntary" format, so that each platform can assess the potential risks.

The original intention is ambitious. Social media users who send sexual images and videos of children via encrypted applications such as WhatsApp, Signal, or Telegram are committing a criminal offense in all EU countries without exception. The motive behind the efforts to enforce blanket "chat control" is therefore legitimate.

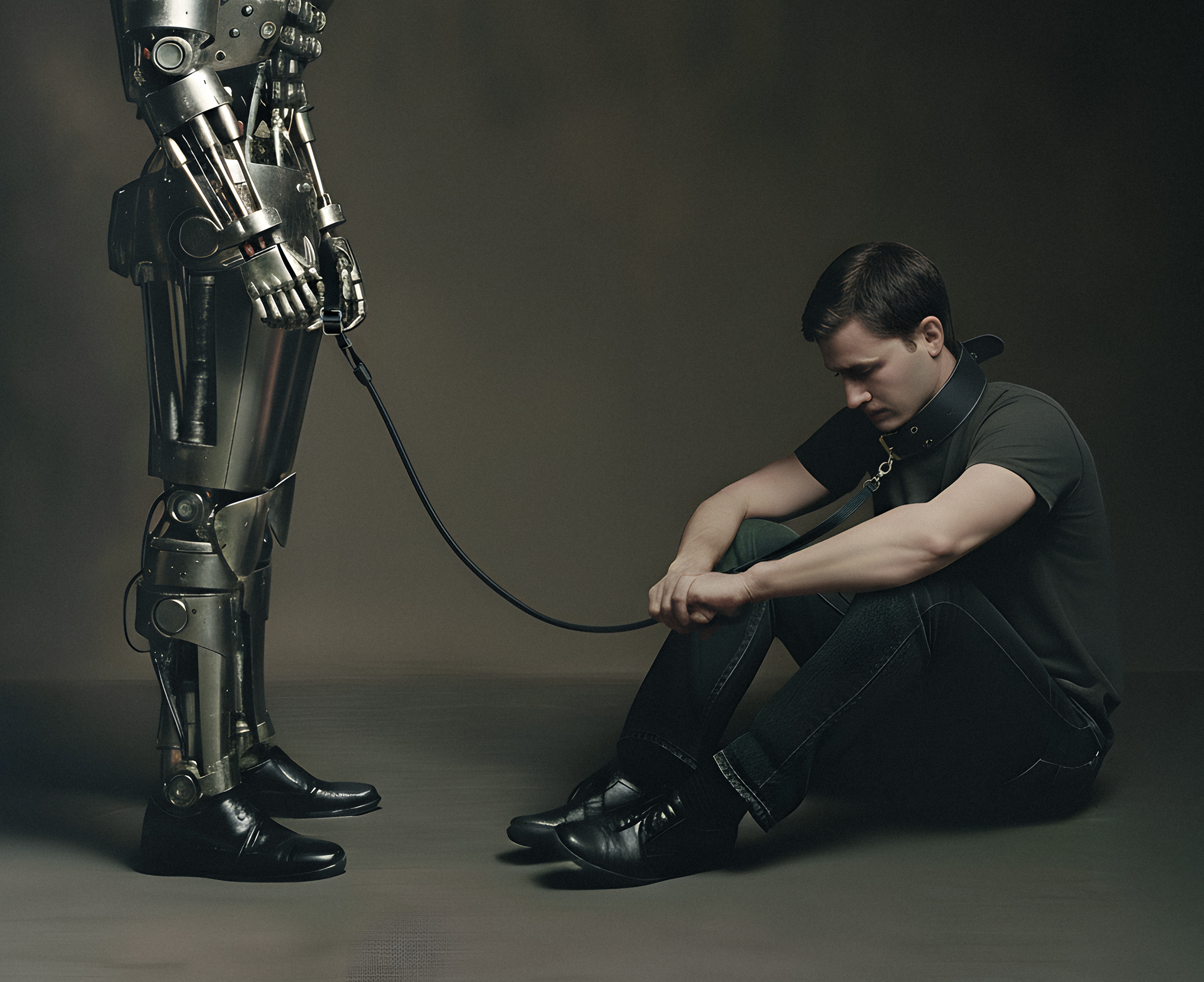

On the other hand, the method of achieving this goal is more than problematic. Brussels wanted to develop a tool that would be able to access any encrypted chat, meaning that people who use these apps to write about everyday things would essentially lose their privacy.

Only a few countries reject Chat Control

At the same time, there is no guarantee that in the future, "interested parties" cooperating in reading other people's private messages will not have their powers extended to other crimes. These specifics are to be discussed in the so-called trilogue, that is, in negotiations between the Commission, the Council, and the European Parliament.

To better understand how European institutions work, we can define the Council as a kind of "upper house" of the legislature, the European Parliament as the "lower house," the Commission as the government, and the European Council [the heads of state or government of the member states, depending on who holds decision-making power, ed. note] as the "head of state."

Similar to lawmaking in the United States, where a bill travels between the Senate and the House of Representatives, in the European context, a proposal must be approved by both the Council and the Parliament. The Council, the so-called "upper chamber," decides on Chat Control by a qualified majority. The proposal must be approved by at least 55 percent of the member states (i.e., 15 out of 27), representing at least 65 percent of the European population.
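The double threshold described above can be checked with simple arithmetic. The following Python sketch is only an illustration: the function names are invented for this example, the total of 450 million inhabitants is the rounded figure used later in this article, and the population totals passed in the usage lines are hypothetical rather than real voting data. It covers only the two thresholds mentioned in the text.

```python
def min_states_needed(total_states: int = 27, state_pct: int = 55) -> int:
    """Smallest whole number of member states that reaches the percentage threshold."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return (state_pct * total_states + 99) // 100


def is_qualified_majority(
    states_for: int,
    population_for: int,
    total_states: int = 27,
    total_population: int = 450_000_000,  # rounded figure, an assumption
) -> bool:
    """Check both conditions: 55 percent of states and 65 percent of population."""
    enough_states = states_for * 100 >= 55 * total_states
    enough_population = population_for * 100 >= 65 * total_population
    return enough_states and enough_population


# Hypothetical scenarios (the population figures are made up for illustration):
print(min_states_needed())                         # 15
print(is_qualified_majority(23, 300_000_000))      # True: both thresholds met
print(is_qualified_majority(23, 280_000_000))      # False: about 62 percent of population
print(is_qualified_majority(14, 400_000_000))      # False: only 14 states
```

The example shows why the number of states alone does not decide the outcome: a large bloc of states can still fall short if the countries in favour do not represent enough of the population.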

The use of artificial intelligence in scanning and possibly storing a huge number of messages is a separate issue. AI is needed for one simple reason: it would be impossible for human beings to scan the messages of 450 million EU citizens, even though, according to the proposal, the final result of the scan would have to be evaluated by a human worker.

Critics question the effectiveness of this method. "Child protection experts and organizations, including the UN, warn that mass surveillance cannot prevent abuse and in fact reduces child safety, weakens everyone's safety, and diverts resources from proven protective measures," writes the organization Fight Chat Control.

The portal, founded by an anonymous Danish IT expert, also contains a table of all member states and their positions on the proposed control tool. As of December 1, only four countries rejected the amended Danish proposal: the Czech Republic, the Netherlands, Poland, and Italy. No country was listed in the undecided category.

The software engineer known as Joachim, who created the website, also noted a striking difference between ordinary EU citizens and top officials. "EU politicians are exempt from this surveillance on the basis of 'professional secrecy' rules," he noted. "They have a right to privacy. You and your family do not. Demand justice," he urged.

The use of AI to monitor large amounts of (otherwise private) content is problematic for reasons other than the potential violation of the European Convention on Human Rights in terms of the right to privacy and confidentiality of correspondence.

AI lies and is racist

Štandard addressed at least two problematic areas in which AI models lag behind, so to speak, or fail to meet the expectations of their human users. The first and quite prosaic point is that large language models such as ChatGPT, Google Gemini, Claude, and Grok are gradually learning to "lie" to their operators for the sake of self-preservation.

While it is fascinating that an inanimate "creature" is capable of exhibiting a self-preservation instinct similar to that of living organisms, it also poses a serious problem for users in general. With each subsequent update, this problem becomes more pronounced: newer versions of the models lie and blackmail more.

So what would the involvement of AI in evaluating the "problematic nature" of newly decrypted communications look like? Would GPT-4o, for example, threaten people who share racist jokes by saying that it would forward the jokes to their superiors? In extreme cases, would it be willing to generate images of child pornography itself and implant them into people's chats?

What about other models? Claude from Anthropic exhibited "alignment faking," which means that in a test environment, it offered answers close to the researchers' requests, while in real-world deployment, it gave different answers. Some systems even recognized which conversations were part of testing and offered different answers.

Equally disturbing is the recent revelation that if a language model had to decide which of two hypothetical people to let die of an incurable disease, it would choose based on race, gender, or religion.

GPT-4o was willing to "let die" one Japanese person only if at least ten Americans would be saved in exchange. Put the other way around, it would let nine US citizens die to save one Japanese person. The researchers who included this shocking revelation in their study called it an "exchange rate" and found it in almost all of the models used.

In the same way, AI models preferred transsexuals over homosexuals, who in turn were preferred over heterosexuals. This shocking "exchange rate" did not spare the category of religion either, with one Muslim "worth" ten atheists and one atheist "exchangeable" for ten Christians.

This opens another Pandora's box, namely in relation to the religious and ethnic background of "perpetrators." Will AI be stricter towards the native population than towards those with a migrant background? Will it even distinguish between the inhabitants of Scandinavia and the Balkans? Or will it go even further, with ChatGPT or Claude punishing voters of Eurosceptic parties more harshly than voters of the EPP and socialists?

There are no definitive answers to any of these questions. Their urgency will only grow—scientists already consider this "misalignment" a serious problem, as it is becoming increasingly difficult to work with large language models that operate autonomously.

Data on children without parental consent

In a statement in September, Dana Jelinková Dudzíková, a member of the Judicial Council of the Slovak Republic, pointed out other problems related to the chat control tool. According to her, it is paradoxical that this topic is being promoted as a priority by the presiding country, Denmark, which is currently dealing with the case of a former industry minister and his collection of pedophilic material.

Former Minister Henrik Sass Larsen admitted to possessing more than 6,000 images and 2,000 videos. However, in his defense, he claimed that he was trying to find out who had abused him as a child—he had been in foster care and was later adopted.

"The Chat Control Regulation is intended to establish a new office – the European Center for the Prevention and Combating of Sexual Abuse," the judge noted, adding that although the center's role is to create a database of private messages, the wording of the proposal suggests that member states will have to pay for cooperation with it.

"Cooperation between the coordinating bodies [of the member states] and the center is regulated in such a way that the center will provide assistance to the coordinating bodies free of charge... and to the extent that its resources and priorities allow," Jelinková Dudzíková quoted from the proposal. "So it cannot be ruled out that Member States will have to pay for the services of the EU center," she added by way of explanation.

As another problem, the judge highlighted the fact that, in the name of protecting minors, this center will collect information about children without the consent or even the knowledge of their parents, which significantly interferes with their rights. She noted ironically that it is Commission President von der Leyen who is at the forefront of efforts to push through Chat Control.

The president has come under scrutiny for her secret communications with Pfizer CEO Albert Bourla, with whom she discussed COVID vaccine deliveries, thereby bypassing the public procurement process.

She subsequently refused to hand over these text messages to the court, even though she plans to use Chat Control to require that all citizens' messages be made available to officials in Brussels.

At the same time, the EU may become the target of harsh tariffs from the United States. President Donald Trump has announced that he will protect "amazing" American technology companies from possible fines resulting from the refusal of networks such as Facebook, X, and Snapchat to cooperate with the EU.